What Is The Big Data Pdf

Through its big data projects and Informatics, US Xpress processes and analyses this data to optimise fleet usage, saving millions of dollars a year.(10) Even governments, including those of the UK and the US, are embracing big data. It has been suggested that the UK government, too, could benefit if it capitalised on big data.

Growth and digitization of global information-storage capacity.

'Big data' is a field that treats ways to analyze, systematically extract information from, or otherwise deal with data sets that are too large or complex to be dealt with by traditional data-processing software. Data with many cases (rows) offer greater statistical power, while data with higher complexity (more attributes or columns) may lead to a higher false discovery rate. Big data challenges include data capture, storage, analysis, search, sharing, transfer, visualization, querying, updating, information privacy, and data source. Big data was originally associated with three key concepts: volume, variety, and velocity. Other concepts later attributed to big data are veracity (i.e., how much noise is in the data) and value.

Current usage of the term big data tends to refer to the use of predictive analytics, user behavior analytics, or certain other advanced data analytics methods that extract value from data, and seldom to a particular size of data set. 'There is little doubt that the quantities of data now available are indeed large, but that's not the most relevant characteristic of this new data ecosystem.' Analysis of data sets can find new correlations to 'spot business trends, prevent diseases, combat crime and so on.'

Scientists, business executives, and practitioners of medicine and advertising alike regularly meet difficulties with large data sets. Scientists encounter limitations in their work in fields including complex physics simulations, biology and environmental research.

Data sets grow rapidly, in part because they are increasingly gathered by cheap and numerous information-sensing devices such as aerial remote-sensing equipment, software logs, microphones, and radio-frequency identification (RFID) readers. The world's technological per-capita capacity to store information has roughly doubled every 40 months since the 1980s; as of 2012, 2.5 exabytes (2.5×10^18 bytes) of data are generated every day. Based on an IDC report prediction, the global data volume will grow exponentially from 4.4 zettabytes to 44 zettabytes between 2013 and 2020. By 2025, IDC predicts there will be 163 zettabytes of data. One question for large enterprises is determining who should own big-data initiatives that affect the entire organization.

Relational database management systems, desktop statistics and software packages used to visualize data often have difficulty handling big data.
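As a rough check on the growth figures quoted above, the following sketch converts them into a common unit: an annual growth factor and a doubling time. It assumes smooth exponential growth between the quoted endpoints, which the figures only imply, and the function names are illustrative rather than taken from any report.

```python
import math

def annual_growth_factor(start, end, years):
    """Constant yearly multiplier that takes `start` to `end` in `years` years."""
    return (end / start) ** (1.0 / years)

def doubling_time_months(annual_factor):
    """Months needed to double at a constant yearly growth factor."""
    return 12.0 * math.log(2.0) / math.log(annual_factor)

# IDC prediction quoted in the text: 4.4 ZB (2013) to 44 ZB (2020).
idc_factor = annual_growth_factor(4.4, 44.0, 2020 - 2013)
idc_doubling = doubling_time_months(idc_factor)

# Per-capita storage capacity doubling every 40 months, as an annual factor.
storage_factor = 2.0 ** (12.0 / 40.0)

print(f"IDC forecast: x{idc_factor:.2f} per year, "
      f"doubling every {idc_doubling:.1f} months")
print(f"40-month doubling: x{storage_factor:.2f} per year")
```

Under these assumptions the forecast implies roughly 39 percent growth per year, noticeably faster than the roughly 23 percent per year implied by a 40-month doubling time.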

Working with big data may require 'massively parallel software running on tens, hundreds, or even thousands of servers'. What qualifies as 'big data' varies depending on the capabilities of the users and their tools, and expanding capabilities make big data a moving target. 'For some organizations, facing hundreds of gigabytes of data for the first time may trigger a need to reconsider data management options. For others, it may take tens or hundreds of terabytes before data size becomes a significant consideration.'

Definition

The term has been in use since the 1990s, with some giving credit to John Mashey for popularizing the term. Big data usually includes data sets with sizes beyond the ability of commonly used software tools to capture, curate, manage, and process data within a tolerable elapsed time. Big data philosophy encompasses unstructured, semi-structured and structured data; however, the main focus is on unstructured data. Big data 'size' is a constantly moving target, as of 2012 ranging from a few dozen terabytes to far larger volumes of data. Big data requires a set of techniques and technologies with new forms of integration to reveal insights from data sets that are diverse, complex, and of a massive scale.

A 2016 definition states that 'Big data represents the information assets characterized by such a high volume, velocity and variety to require specific technology and analytical methods for its transformation into value'.

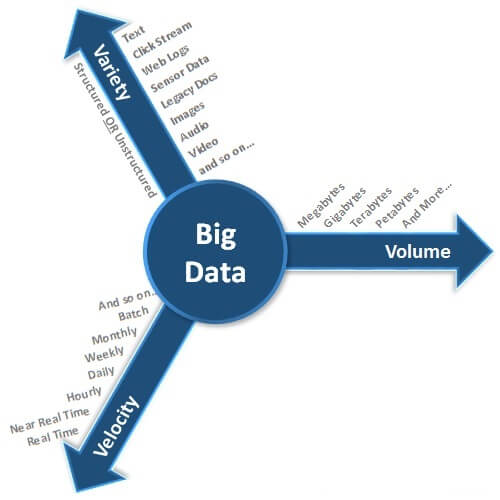

Similarly, Kaplan and Haenlein define big data as 'data sets characterized by huge amounts (volume) of frequently updated data (velocity) in various formats, such as numeric, textual, or images/videos (variety).' Additionally, some organizations add a new V, veracity, to describe it, a revisionism challenged by some industry authorities. The three Vs (volume, variety and velocity) have been further expanded to other complementary characteristics of big data. Machine learning: big data often doesn't ask why and simply detects patterns. Digital footprint: big data is often a cost-free byproduct of digital interaction.

A 2018 definition states 'Big data is where parallel computing tools are needed to handle data', and notes, 'This represents a distinct and clearly defined change in the computer science used, via parallel programming theories, and losses of some of the guarantees and capabilities made by Codd's relational model.'

The growing maturity of the concept more starkly delineates the difference between 'big data' and 'business intelligence'. Business intelligence uses descriptive statistics with data with high information density to measure things, detect trends, etc. Big data uses inductive statistics and concepts from nonlinear system identification to infer laws (regressions, nonlinear relationships, and causal effects) from large sets of data with low information density to reveal relationships and dependencies, or to perform predictions of outcomes and behaviors.

Characteristics

Shows the growth of big data's primary characteristics of volume, velocity, and variety.

Big data can be described by the following characteristics:

Volume: The quantity of generated and stored data. The size of the data determines the value and potential insight, and whether it can be considered big data or not.

Variety: The type and nature of the data. This helps people who analyze it to effectively use the resulting insight. Big data draws from text, images, audio, video; plus it completes missing pieces through data fusion.

Velocity: In this context, the speed at which the data is generated and processed to meet the demands and challenges that lie in the path of growth and development.

Big data is often available in real time. Compared to small data, big data are produced more continually. Two kinds of velocity related to big data are the frequency of generation and the frequency of handling, recording, and publishing.

Veracity: The extended definition for big data, which refers to the data quality and the data value. The quality of captured data can vary greatly, affecting the accuracy of analysis.

Data must be processed with advanced tools (analytics and algorithms) to reveal meaningful information. For example, to manage a factory one must consider both visible and invisible issues with various components. Information generation algorithms must detect and address invisible issues such as machine degradation and component wear on the factory floor.

Architecture

Big data repositories have existed in many forms, often built by corporations with a special need. Commercial vendors historically offered parallel database management systems for big data beginning in the 1990s.

For many years, WinterCorp published the largest database report. Teradata Corporation in 1984 marketed the parallel processing DBC 1012 system. Teradata systems were the first to store and analyze 1 terabyte of data in 1992.

Hard disk drives were 2.5 GB in 1991, so the definition of big data continuously evolves according to Kryder's Law. Teradata installed the first petabyte-class RDBMS-based system in 2007. As of 2017, there are a few dozen petabyte-class Teradata relational databases installed, the largest of which exceeds 50 PB.

Systems up until 2008 were 100% structured relational data. Since then, Teradata has added unstructured data types including XML, JSON, and Avro.

In 2000, Seisint Inc. (now LexisNexis Risk Solutions) developed a C++-based distributed file-sharing framework for data storage and query. The system stores and distributes structured, semi-structured, and unstructured data across multiple servers. Users can build queries in a C++ dialect called ECL. ECL uses an 'apply schema on read' method to infer the structure of stored data when it is queried, instead of when it is stored. In 2004, LexisNexis acquired Seisint Inc.
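The 'apply schema on read' idea mentioned above can be illustrated with a minimal sketch. This is plain Python, not ECL, and the record layout, the `SCHEMA` mapping and the `read_with_schema` helper are all hypothetical: raw records are stored untouched, and field types are only imposed when the data is queried.

```python
import json

# Raw records are stored exactly as they arrived; nothing is validated on write.
RAW_STORE = [
    '{"id": "1", "amount": "19.90", "city": "Leeds"}',
    '{"id": "2", "amount": "5", "note": "no city recorded"}',
]

# The schema lives with the query, not with the storage layer (assumed layout).
SCHEMA = {"id": int, "amount": float, "city": str}

def read_with_schema(raw_lines, schema):
    """Parse each stored line and cast its fields only at read time."""
    for line in raw_lines:
        record = json.loads(line)
        yield {
            field: cast(record[field]) if field in record else None
            for field, cast in schema.items()
        }

rows = list(read_with_schema(RAW_STORE, SCHEMA))
total = sum(row["amount"] for row in rows)
print(rows)
print(total)
```

Because the schema is applied at query time, a different query could read the same stored lines with a different schema (for example, one that also extracts `note`) without rewriting the stored data.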

In 2008, LexisNexis acquired ChoicePoint, Inc. and their high-speed parallel processing platform. The two platforms were merged into HPCC (or High-Performance Computing Cluster) Systems, and in 2011 HPCC was open-sourced under the Apache v2.0 License. Quantcast File System was available about the same time.

CERN and other physics experiments have collected big data sets for many decades, usually analyzed via high-performance computing (supercomputers) rather than the commodity map-reduce architectures usually meant by the current 'big data' movement.

In 2004, Google published a paper on a process called MapReduce that uses a similar architecture. The MapReduce concept provides a parallel processing model, and an associated implementation was released to process huge amounts of data. With MapReduce, queries are split and distributed across parallel nodes and processed in parallel (the Map step). The results are then gathered and delivered (the Reduce step).
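The Map and Reduce steps described above can be sketched with a word count, the customary teaching example. This is a single-process illustration under simplifying assumptions, not the published implementation: each chunk stands in for the share of input handled by one node, and the function names are illustrative.

```python
from collections import defaultdict

def map_step(chunk):
    """Map: turn one chunk of input into (key, value) pairs."""
    return [(word.lower(), 1) for word in chunk.split()]

def shuffle(mapped_chunks):
    """Group all values by key so each key can be reduced independently."""
    groups = defaultdict(list)
    for pairs in mapped_chunks:
        for key, value in pairs:
            groups[key].append(value)
    return groups

def reduce_step(key, values):
    """Reduce: combine all values gathered for one key."""
    return key, sum(values)

def map_reduce(chunks):
    # On a real cluster each map_step call would run on a different node.
    mapped = [map_step(chunk) for chunk in chunks]
    grouped = shuffle(mapped)
    return dict(reduce_step(key, values) for key, values in grouped.items())

chunks = ["big data is big", "data about data"]
counts = map_reduce(chunks)
print(counts)
```

The map calls never share state, which is what allows them to be spread over many machines; only the grouping step needs to see results from every chunk.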

The framework was very successful, so others wanted to replicate the algorithm. Therefore, an implementation of the MapReduce framework was adopted by an Apache open-source project named Hadoop. Apache Spark was developed in 2012 in response to limitations in the MapReduce paradigm, as it adds the ability to set up many operations (not just map followed by reduce).

MIKE2.0 is an open approach to information management that acknowledges the need for revisions due to big data implications identified in an article titled 'Big Data Solution Offering'. The methodology addresses handling big data in terms of useful permutations of data sources, complexity in interrelationships, and difficulty in deleting (or modifying) individual records.

Studies in 2012 showed that a multiple-layer architecture is one option to address the issues that big data presents. A distributed parallel architecture distributes data across multiple servers; these parallel execution environments can dramatically improve data processing speeds. This type of architecture inserts data into a parallel DBMS, which implements the use of MapReduce and Hadoop frameworks.
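One common way to distribute data across multiple servers is hash partitioning. The text does not say which scheme the cited studies used, so the sketch below is an assumption chosen for illustration; `shard_for` and `distribute` are hypothetical helper names, and each "server" is just an in-memory list.

```python
import hashlib

def shard_for(key, n_servers):
    """Pick a server index from a stable hash of the record key."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % n_servers

def distribute(records, n_servers):
    """Place each (key, value) record on the server chosen by its key."""
    servers = [[] for _ in range(n_servers)]
    for key, value in records:
        servers[shard_for(key, n_servers)].append((key, value))
    return servers

records = [(f"sensor-{i}", i * 1.5) for i in range(12)]
servers = distribute(records, n_servers=3)
for index, shard in enumerate(servers):
    print(index, len(shard))
```

A stable hash (rather than Python's built-in `hash`, which is randomized per process for strings) means every node computes the same placement for a given key, so a lookup can go straight to the right server.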

This type of framework looks to make the processing power transparent to the end user by using a front-end application server.

The data lake allows an organization to shift its focus from centralized control to a shared model to respond to the changing dynamics of information management.

Big data has increased the demand for information management specialists so much that large software vendors have spent more than $15 billion on software firms specializing in data management and analytics. In 2010, this industry was worth more than $100 billion and was growing at almost 10 percent a year: about twice as fast as the software business as a whole.

Developed economies increasingly use data-intensive technologies. There are 4.6 billion mobile-phone subscriptions worldwide, and between 1 billion and 2 billion people accessing the internet. Between 1990 and 2005, more than 1 billion people worldwide entered the middle class, which means more people became more literate, which in turn led to information growth. The world's effective capacity to exchange information through telecommunication networks was 281 petabytes in 1986, 471 petabytes in 1993, 2.2 exabytes in 2000, and 65 exabytes in 2007, and predictions put the amount of internet traffic at 667 exabytes annually by 2014. According to one estimate, one-third of the globally stored information is in the form of alphanumeric text and still image data, which is the format most useful for most big data applications.

This also shows the potential of yet unused data (i.e. in the form of video and audio content).

While many vendors offer off-the-shelf solutions for big data, experts recommend the development of in-house solutions custom-tailored to solve the company's problem at hand if the company has sufficient technical capabilities.

Government

The use and adoption of big data within governmental processes allows efficiencies in terms of cost, productivity, and innovation, but does not come without its flaws. Data analysis often requires multiple parts of government (central and local) to work in collaboration and create new and innovative processes to deliver the desired outcome.

Civil registration and vital statistics (CRVS) collects all certificate statuses from birth to death. CRVS is a source of big data for governments.

International development

Research on the effective usage of information and communication technologies for development (also known as ICT4D) suggests that big data technology can make important contributions but also present unique challenges to international development. Advancements in big data analysis offer cost-effective opportunities to improve decision-making in critical development areas such as health care, employment, crime, security, and natural disaster and resource management.

Additionally, user-generated data offers new opportunities to give the unheard a voice. However, longstanding challenges for developing regions such as inadequate technological infrastructure and economic and human resource scarcity exacerbate existing concerns with big data such as privacy, imperfect methodology, and interoperability issues.

Manufacturing

Based on the TCS 2013 Global Trend Study, improvements in supply planning and product quality provide the greatest benefit of big data for manufacturing. Big data provides an infrastructure for transparency in the manufacturing industry, which is the ability to unravel uncertainties such as inconsistent component performance and availability. Predictive manufacturing, as an applicable approach toward near-zero downtime and transparency, requires vast amounts of data and advanced prediction tools for systematically processing data into useful information. A conceptual framework of predictive manufacturing begins with data acquisition, where different types of sensory data can be acquired, such as acoustics, vibration, pressure, current, voltage and controller data. Vast amounts of sensory data, in addition to historical data, make up the big data in manufacturing.
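To make the idea of turning sensory data into useful information concrete, here is a deliberately simple sketch that flags readings drifting away from a healthy baseline. The vibration values are invented for illustration, and the three-sigma rule and the `drift_alerts` helper are assumptions; real prediction tools are considerably more sophisticated.

```python
from statistics import mean, stdev

def drift_alerts(readings, baseline_size=5, threshold=3.0):
    """Return indices of readings more than `threshold` standard
    deviations away from the mean of the first `baseline_size` readings."""
    baseline = readings[:baseline_size]
    mu, sigma = mean(baseline), stdev(baseline)
    return [
        index
        for index, value in enumerate(readings[baseline_size:], start=baseline_size)
        if abs(value - mu) > threshold * sigma
    ]

# Hypothetical vibration amplitudes from one machine (arbitrary units).
vibration = [1.00, 1.02, 0.98, 1.01, 0.99, 1.00, 1.03, 1.20, 1.35]
alerts = drift_alerts(vibration)
print(alerts)
```

The early readings define what normal looks like, so a gradual rise that would be invisible to an operator shows up as a run of flagged indices near the end of the series.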


The generated big data acts as the input into predictive tools and preventive strategies such as Prognostics and Health Management (PHM).

Healthcare

Big data analytics has helped healthcare improve by providing personalized medicine and prescriptive analytics, clinical risk intervention and predictive analytics, waste and care variability reduction, automated external and internal reporting of patient data, standardized medical terms and patient registries and fragmented point solutions.

Some areas of improvement are more aspirational than actually implemented. The level of data generated within healthcare systems is not trivial. With the added adoption of mHealth, eHealth and wearable technologies, the volume of data will continue to increase. This includes electronic health record data, imaging data, patient-generated data, sensor data, and other forms of difficult-to-process data. There is now an even greater need for such environments to pay greater attention to data and information quality.

'Big data very often means 'dirty data' and the fraction of data inaccuracies increases with data volume growth.' Human inspection at the big data scale is impossible, and there is a desperate need in health services for intelligent tools for accuracy and believability control and for handling missed information. While extensive information in healthcare is now electronic, it fits under the big data umbrella as most is unstructured and difficult to use.

The use of big data in healthcare has raised significant ethical challenges ranging from risks for individual rights, privacy and autonomy, to transparency and trust.

Education

A study found a shortage of 1.5 million highly trained data professionals and managers, and a number of universities have created master's programs to meet this demand. Private bootcamps have also developed programs to meet that demand, including both free and paid programs. In the specific field of marketing, one of the problems stressed by Wedel and Kannan is that marketing has several subdomains (e.g., advertising, promotions, product development, branding) that all use different types of data. Because one-size-fits-all analytical solutions are not desirable, business schools should prepare marketing managers to have wide knowledge of all the different techniques used in these subdomains to get a big picture and work effectively with analysts.

Media

To understand how the media utilizes big data, it is first necessary to provide some context into the mechanism used for media process. It has been suggested by Nick Couldry and Joseph Turow that practitioners in media and advertising approach big data as many actionable points of information about millions of individuals.

The industry appears to be moving away from the traditional approach of using specific media environments such as newspapers, magazines, or television shows and instead taps into consumers with technologies that reach targeted people at optimal times in optimal locations. The ultimate aim is to serve or convey a message or content that is (statistically speaking) in line with the consumer's mindset. For example, publishing environments are increasingly tailoring messages (advertisements) and content (articles) to appeal to consumers, drawing on insights gleaned exclusively through various data-mining activities. Uses include the targeting of consumers (for advertising by marketers) and data journalism, in which publishers and journalists use big data tools to provide unique and innovative insights and infographics.

Channel 4, the British television broadcaster, is a leader in the field of big data.

Insurance

Health insurance providers are collecting data on social 'determinants of health' such as food and TV consumption, marital status, clothing size and purchasing habits, from which they make predictions on health costs, in order to spot health issues in their clients. It is controversial whether these predictions are currently being used for pricing.

Internet of Things (IoT)


Big data and the IoT work in conjunction. Data extracted from IoT devices provides a mapping of device interconnectivity. Such mappings have been used by the media industry, companies and governments to more accurately target their audience and increase media efficiency. IoT is also increasingly adopted as a means of gathering sensory data, and this sensory data has been used in medical, manufacturing and transportation contexts.

Kevin Ashton, the digital innovation expert who is credited with coining the term, defines the Internet of Things in this quote: “If we had computers that knew everything there was to know about things, using data they gathered without any help from us, we would be able to track and count everything, and greatly reduce waste, loss and cost. We would know when things needed replacing, repairing or recalling, and whether they were fresh or past their best.”

Information Technology

Especially since 2015, big data has come to prominence within business operations as a tool to help employees work more efficiently and streamline the collection and distribution of information technology (IT). The use of big data to resolve IT and data collection issues within an enterprise is called IT operations analytics (ITOA). By applying big data principles to the concepts of machine intelligence and deep computing, IT departments can predict potential issues and move to provide solutions before the problems even happen.